Apple's Worldwide Developers Conference (WWDC) starts today with the traditional keynote kicking things off at 10:00 a.m. Pacific Time. MacRumors is on hand for the event and we'll be sharing details and our thoughts throughout the day.

We're expecting to see a number of software-related announcements with a focus on Apple's efforts to infuse AI throughout its operating systems and apps. We'll be seeing Apple take the wraps off iOS 18, macOS 15, watchOS 11, visionOS 2, and more, although we're not expecting to see any hardware introduced today.

Apple is providing a live video stream on its website, on YouTube, and in the company's TV and Developer apps across its platforms. We will also be updating this article with live blog coverage and issuing Twitter updates through our @MacRumorsLive account as the keynote unfolds. Highlights from the event and separate news stories regarding today's announcements will go out through our @MacRumors account.


Live blog transcript ahead...

7:44 am: With over two hours to go until the keynote, attendees are already gathering at Apple Park with Apple providing coffee and donuts to help get the day started.

7:47 am:

Can’t wait for you to join us at #WWDC24 this morning! pic.twitter.com/3bHzspURPH

— Tim Cook (@tim_cook) June 10, 2024

9:07 am: MacRumors is on the ground in Cupertino, and we'll be bringing you coverage all day and for the rest of the week!

9:11 am: OpenAI's Sam Altman is in attendance today as Apple is expected to announce a partnership to bring ChatGPT capabilities to iOS 18.

9:11 am:

Looks like the man of the hour is in Cupertino https://t.co/Ui3vsZtPVc

— Mark Gurman (@markgurman) June 10, 2024

9:31 am: Greg Joswiak once again teases an AI theme for today's keynote.

Tuning into #WWDC24 at 10am PT is the… intelligent thing to do! https://t.co/Yyg2uq2QmI

— Greg Joswiak (@gregjoz) June 10, 2024

9:41 am: Apple's online store has been taken down for now, although we're not expecting any significant hardware announcements.

9:49 am: The pre-event stream is live on apple.com with gently shifting neon colors.

9:57 am: Tim Cook is on stage within Apple Park, welcoming the crowd to WWDC 2024.

9:59 am: He's been joined by Craig Federighi.

10:01 am: The stream has morphed into an Apple logo. Here we go!

10:02 am: We're starting with a plane "somewhere over California." An Apple-themed plane filled with execs, including Craig, is dropping them by parachute into Apple Park, with the names of Apple's various OS platforms on the chutes.

10:03 am: Tim is welcoming everyone and talking about Apple's platforms. We're starting with Apple TV+.

10:04 am: "I'm proud to say that Apple TV+ has been recognized for delivering the highest rated originals in the industry for three years running."

10:06 am: They're showing a series of trailers for upcoming Apple TV+ shows, with stars including Brad Pitt, George Clooney, Harrison Ford, Natalie Portman and more. "Fly Me To The Moon", "Pachinko", "Silo", "Severance", "Slow Horses", "Lady in the Lake", "The Instigators", "Shrinking."

10:06 am: The schedule today: visionOS, iOS, Audio & Home, watchOS, iPadOS, macOS. "Then we'll dive deeper into Intelligence."

10:07 am: We're starting with visionOS and Apple Vision Pro.

10:08 am: There are more than 2,000 apps created specifically for Apple Vision Pro.

10:08 am: visionOS 2!

10:08 am: "New ways to connect with your most important memories, great enhancements to productivity, and powerful new developer APIs for immersive shared experiences."

10:08 am: Updates coming to the Photos app.

10:09 am: "Spatial photos are even more powerful. Bringing life and realism to your favorite moments with family and friends. It's incredibly moving to step back into a treasured memory, and the rich visual depth of spatial photos, makes this possible."

10:10 am: "Now visionOS 2 lets you do something truly amazing with the photos already in your library with just the tap of a button. Advanced machine learning derives both a left and right eye view from your beautiful 2D image, creating a spatial photo with natural depth that looks stunning on Vision Pro, it's so magical to reach into the past and bring your most cherished photos into the future."

10:11 am: Mac Virtual Display is getting larger and higher resolution, with a size equivalent to two 4K monitors side by side.

10:13 am: There are new frameworks and APIs, including tabletop and volumetric APIs, making it easier to create new spatial content. Canon is also offering a new spatial lens for the Canon R7.

10:13 am: Spatial videos can be edited in Final Cut Pro and published in the new Vimeo app on Apple Vision Pro.

10:14 am: For Apple Immersive Video: "We've partnered first with Blackmagic Design, a leading innovator in creative video technology, to build a new production workflow consisting of Blackmagic cameras, DaVinci Resolve Studio, and Apple Compressor. These will all be available to creators later this year, and there's new Apple Immersive Video content on the way."

10:15 am: A new extreme sports series with Red Bull and a music video with The Weeknd are on the way. Apple Vision Pro is also coming to 8 new countries, starting at the end of June with the rest in early July.

10:15 am: Now we've moved on to iOS 18.

10:16 am: Changes to the Home Screen.

10:17 am: Apps can now be placed anywhere on the grid rather than only filling from the top. There's also a new dark look for icons, plus a new customization sheet that lets you tint icons to complement your wallpaper (or pick any color).

10:17 am: Control Center is getting updates too.

10:18 am: Control Center gets new colors and new groups of controls, such as media playback and Home controls, that you can swipe to.

10:19 am: Swipe down from the top right and you can keep swiping to reach more Control Center screens. A new controls gallery lets you customize the controls you see. Developers can include controls from their apps, adding quick access to things like remotely starting a car.

10:19 am: The Controls API also lets controls be placed on the Lock Screen, replacing the flashlight and camera buttons, and assigned to the Action Button on iPhone 15 Pro.

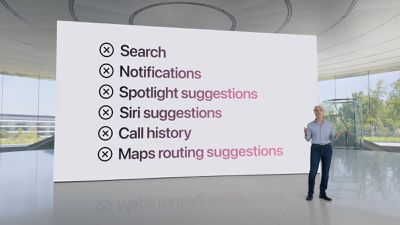

10:20 am: You can now lock an app, requiring authentication to open it. Locking also hides the app's information from Search, Siri, and elsewhere.

10:20 am: Apps can also be hidden entirely and put in a hidden apps folder and locked away.

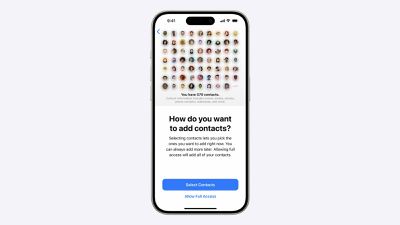

10:21 am: Privacy settings are getting more granular, such as giving an app access to only certain contacts rather than your entire contact list.

10:21 am: There's also a new, more seamless way to connect accessories.

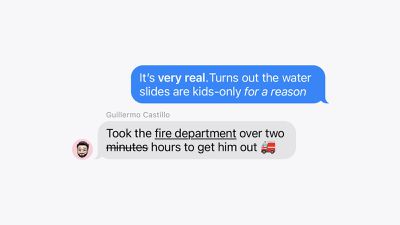

10:22 am: Updates coming to Messages, starting with Tapbacks.

10:22 am: Tapbacks can now be done with any emoji or sticker, and they've been given colors.

10:22 am: Scheduled texts to send later, plus text formatting. Bold, italicize, underline, or strikethrough.

10:23 am: New text effects, with some words and phrases suggesting effects automatically. Big, Shake, Bloom, Nod, and more.

10:23 am: Messages via Satellite, same tech as Emergency SOS via Satellite.

10:24 am: Send and receive messages, emoji, and Tapbacks over satellite. SMS texting over satellite is supported too.

10:24 am: Updates to Mail, with messages automatically categorized via Primary, Transactions, Updates, and Promotions, to help prioritize emails.

10:26 am: New Tap to Cash feature, sending cash by holding phones together.

10:26 am: Maps gets topographical maps with trail networks and hiking routes.

10:27 am: Event tickets get a new guide, with information about the venue and maps to find your seat.

10:27 am: Game Mode coming to iPhone, minimizing background activity to keep frame rates high, plus more responsive connections to AirPods and wireless game controllers.

10:27 am: The Photos app is getting a redesign.

10:28 am: The app has been unified into a single view, with a photo grid of your entire library at the top and the library organized by theme below. You can switch to Months and Years views at the bottom, and a filter button lets you hide screenshots and filter in other ways.

10:29 am: Below the grid, Collections let you browse by topics like Time, People, Memories, Trips, and more.

10:29 am: Recent Days shows photos from the past few days, automatically filtering out clutter like photos of receipts.

10:29 am: People and Pets now gathers photos of groups of people.

10:30 am: Collections can be reordered or pinned.

10:31 am: Swipe right from the grid and you'll see a new carousel with Featured Photos and other favorite collections. It automatically refreshes to surface new images.

10:31 am: RCS messaging support and larger icons on the Home Screen are coming too, along with Reminders integration in Calendar.

10:31 am: Now moving to Audio & Home.

10:32 am: Starting with AirPods.

10:32 am: New ability to nod your head Yes, or shake your head No to interact with Siri silently.

10:32 am: If a call comes in, you can shake your head to refuse it without saying anything.

10:33 am: Voice Isolation is coming to AirPods Pro, removing the background noise around you.

10:33 am: Personalized spatial audio is expanding to gaming, with new API for game developers.

10:33 am: Need For Speed Mobile will be one of the first titles with personalized spatial audio.

10:34 am: Now to Home and tvOS.

10:34 am: A new feature called InSight is coming to Apple TV+. When you're watching an Apple original show or movie, it can display which actors are currently on screen and what song is playing, either on the TV or on your iPhone.

10:35 am: Enhance Dialogue now supports TV speakers and receivers, AirPods, and other Bluetooth devices, using machine learning to give voices greater clarity.

10:35 am: Subtitles will automatically appear if you mute the volume or go back a few seconds.

10:35 am: Adding support for 21:9 projectors to Apple TV.

10:36 am: New screen savers include a Portraits category, a TV Shows and Movies category with videos from Apple TV+ shows, and a new Snoopy screen saver.

10:37 am: A redesigned Apple Fitness+ experience is coming to tvOS, too. Now moving to watchOS. Things are moving quickly today.

10:37 am: watchOS 11 brings a new "training load" measurement to track intensity.

10:38 am: "A powerful new algorithm" uses heart rate, altitude, age, and weight to estimate an effort rating for each workout. Effort rating and workout duration are then used to calculate training load.

10:38 am: "We think training load will help enthusiasts and elite athletes get to the next level with data, insights and motivation they need to make the best decisions about their training."

10:39 am: Activity ring goals can now be adjusted by day of the week or paused for a rest day, without breaking your awards streak.

10:40 am: A new Vitals app gives a deeper understanding of your body with a daily check-in on your health status, showing key health metrics and how they compare historically. Metrics are highlighted when they fall outside your typical range, and if multiple metrics are out of range, you'll get a tailored message about how they could be affecting you.

10:40 am: Cycle Tracking now shows estimates of gestational age and reflects pregnancy across health charts, so you can review things like high heart rate notification thresholds.

10:42 am: New widgets will be added to the Smart Stack automatically, such as Translate when you arrive in a new country or Weather when rain is imminent. Live Activities are coming to Apple Watch, too.

10:42 am: You can use Check In from a workout to let a friend know that you've finished a late-night run and made it back home safely.

10:43 am: Improved Photos watch face gives more control and uses machine learning to automatically crop photos.

10:44 am: watchOS is done, flying back to Craig.

10:44 am: iPadOS is up next.

10:44 am: iPadOS 18! Includes the Home Screen updates, customized Control Center, and improved Photos app.

10:45 am: There's a new floating tab bar that lets you navigate to different parts of an app. The tab bar can morph into the sidebar, and you can customize it to keep favorites within easy reach. It's used in apps across the system, and there's also a new document browser.

10:46 am: Refined animations across the UI. "Animations will feel even more responsive" and there are APIs for developers to integrate the new animations.

10:46 am: SharePlay includes tapping and drawing on the screen when screen sharing, and you can remotely control someone's iPad or iPhone.

10:47 am: Calculator is coming to iPad!

10:47 am: Includes history and unit conversions.

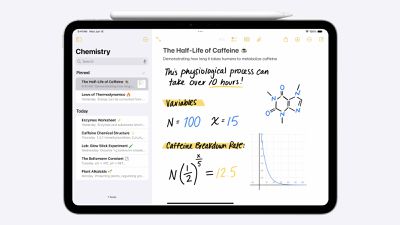

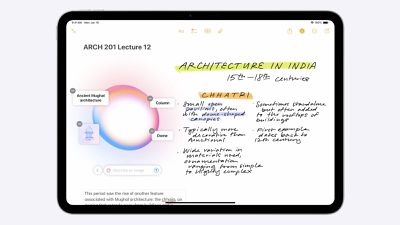

10:47 am: When used with Apple Pencil, there's a new Math Notes feature.

10:48 am: You can hand-write expressions. When you write an equals sign, it'll automatically calculate the answer and show the answer in handwriting like your own. Use basic or scientific math, and notes can be saved for later.

10:48 am: Can sum a list of numbers automatically by drawing a line beneath them.

10:49 am: Variables are supported, allowing you to solve algebraic equations. Change a variable and the results update in real time. You can even create a graph.

10:50 am: Notes has the same math capabilities as Calculator.

10:50 am: New Smart Script in Notes. Automatically improves your handwriting as you write, modifies your handwriting to make it more consistent. "It's still your own writing but it looks smoother, straighter, and more legible."

10:51 am: Can automatically move text to create more room, or scratch out words to erase them.

10:52 am: Craig is parkouring across the Apple Park campus to talk about macOS.

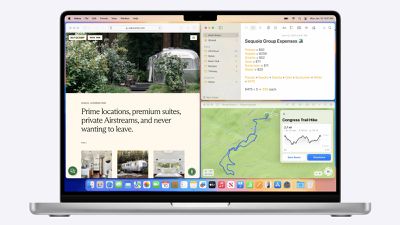

10:52 am: But what will macOS be named after? "Distracted briefly from their marathon hacky sack session..." macOS Sequoia.

10:54 am: Updates to Continuity. iPhone Mirroring allows you to view and control your iPhone from your Mac.

10:54 am: Your iPhone can be mirrored to your Mac, showing everything, including icons, the Home Screen, and apps. Any app can be opened directly from the Mac.

10:55 am: "We're bringing iPhone notifications to Mac, appearing alongside Mac notifications. And can interact with them."

10:55 am: "When I click on it, BAM, I'm taken right into the app on my iPhone."

10:55 am: "My iPhone's audio comes through my Mac. You might be wondering what's on my iPhone's screen while I use iPhone mirroring... it stays locked, so nobody else can access it."

10:56 am: You can even drag and drop items and files from Mac to iPhone.

10:56 am: New window tiling automatically sizes windows to fill up the screen.

10:57 am: Presenter Preview mode for screen sharing, working with apps like FaceTime and Zoom. New automatic background replacement.

10:57 am: Building on Keychain to manage passwords.

10:57 am: A new Passwords app holds Wi-Fi passwords, app and website passwords, verification codes, and more.

10:58 am: Passwords sync across devices, and AutoFill fills in credentials stored in the Passwords app.

10:58 am: It's supported on Windows through the iCloud for Windows app too.

10:59 am: Now, Safari in Sequoia. Talking up the new features added in the past few years. "If you missed anything we've added to Safari in the last few years, it's time to check it out."

10:59 am: New Highlights feature, using machine learning to highlight information for you as you browse, pulling information out of pages for you.

10:59 am: Find a hotel's location and phone number without needing to search through the entire page.

11:00 am: Includes summaries of articles within Reader.

11:00 am: A new video viewer lets videos fill the browser window while keeping all the controls, and automatically switches to picture-in-picture if you click away.

11:00 am: Now moving to Gaming.

11:01 am: Talking about all the gaming support across the Apple platforms.

11:02 am: Game Porting Toolkit 2 makes it easier to bring more advanced games to Mac, with better Windows compatibility and new shader debugging tools. It also helps games move from Mac to iPad and iPhone more easily.

11:03 am: Ubisoft says Assassin's Creed Shadows is coming to Mac day and date with other platforms, on November 15.

11:04 am: Assassin's Creed Shadows is coming to iPad, too.

11:04 am: Other games are coming, too.

11:05 am: Apple Pay is coming to third-party browsers, and the Mac is getting the same Photos updates.

11:05 am: Developer betas are coming today, with public betas coming next month. All releases are coming to users this fall.

11:06 am: Tim is back, talking about how Apple has been using artificial intelligence and machine learning for years.

11:07 am: Now, talking about generative AI. "It has to be powerful enough to help with the things that matter most. It has to be intuitive and easy to use. It has to be deeply integrated into your product experiences. Most importantly, it has to understand you and be grounded in your personal context... and of course, it has to be built with privacy from the ground up."

11:07 am: "It's personal intelligence and it's the next big step for Apple."

11:07 am: Introducing Apple Intelligence, the new personal intelligence system that makes your most personal products even more useful and delightful.

11:07 am: Craig: "This is a moment we've been working towards for a long time."

11:08 am: Chat tools "know very little about you and your needs."

11:08 am: Bringing "you intelligence that understands you. Puts powerful generative models right at the core of your iPhone, iPad, and Mac."

11:08 am: Deeply integrated into platforms and apps.

11:09 am: Going to talk about Apple Intelligence's capabilities, architecture, and experience.

11:09 am: Will allow the computer to understand languages, images, action, and personal context.

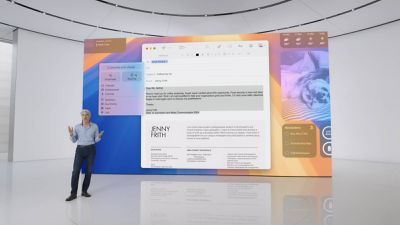

11:10 am: Deep natural language understanding powers new system-wide writing tools, letting you rewrite or proofread text automatically across Mail, Notes, Safari, Pages, Keynote, and third-party apps.

11:11 am: On image capabilities: from photos to emoji to GIFs, it's fun to express yourself visually, and now you can create new images too. Images can be personalized for conversations. To wish a friend happy birthday, create an image of them surrounded by balloons and cake; to tell Mom she's your hero, send an image of her in a superhero cape. Styles include Sketch, Illustration, and Animation.

11:11 am: Apple Intelligence can take actions within apps.

11:11 am: "Pull up the files that Joz shared with me last week" or "show me all the photos of Mom, Olivia, and me" or "play the podcast that my wife sent me the other day."

11:11 am: Accomplish more while saving time.

11:12 am: Apple Intelligence is grounded in your personal information, accessing data from around your apps and what's on your screen. Suppose a meeting is being rescheduled for the afternoon, and I'm wondering if it's going to prevent me from getting to my daughter's performance on time. It can understand who my daughter is, the details she emailed several days ago, the schedule of my meeting, and the traffic between my office and the theater.

11:13 am: You should not have to hand over all the information from your life to someone's AI cloud.

11:13 am: "It's built with privacy at its core."

11:13 am: "On-device processing is aware of your personal data without collecting your personal data."

11:14 am: "With advanced Apple silicon, we have the computational foundation to power Apple Intelligence." It includes highly capable large language and diffusion models that can adapt on the fly to your current activity, plus an on-device semantic index to organize and surface information from across your apps.

11:14 am: Feeds data to the generative model to have context to assist you. Many models run entirely on device.

11:14 am: Sometimes you need more power. Servers can help with this, but they might store or misuse your data, and you can't verify that they're doing what they say. With Apple, you are in control of your data.

11:15 am: The privacy of your iPhone is extending into the cloud to unlock more intelligence. "Private Cloud Compute."

11:15 am: Private Cloud Compute allows Apple Intelligence to flex and scale its computational capacity and use server-based models while protecting your privacy. It runs on servers using Apple silicon, offering the privacy and security of your iPhone from the silicon on up.

11:16 am: When you make a request, Apple Intelligence analyzes whether it can be accomplished on device. If it needs more compute capacity, it sends the data needed to fulfill the request. Data is not stored, and is used only for your requests.

11:17 am: 13 years ago, Siri was introduced. "We had an ambitious vision for it. We've been steadily building towards it."

11:18 am: Siri gets a new look. It's more deeply integrated into the experience. Includes richer understanding capabilities.

11:18 am: It allows you to make corrections in real-time and maintains conversational context.

11:19 am: You can now type to Siri.

11:19 am: Double-tap at the bottom of the screen to pop up a keyboard to type to Siri.

11:19 am: Siri will have knowledge about features and settings, so it can help you do things on your iPhone, iPad, or Mac.

11:19 am: Describe a feature and Siri can find it for you.

11:20 am: More features rolling out over the next year.

11:20 am: Siri will eventually have on-screen awareness. You could have Siri add an address sent in a message to a contact.

11:21 am: Siri will be able to take actions in and across apps, including some that leverage image and writing capabilities. "Show me pictures of my friend Stacy in New York in her pink coat."

11:21 am: A new App Intents framework and API lets developers define actions within their apps.

11:22 am: Siri will be able to understand and take actions in more apps over time.

11:22 am: Includes a semantic index of photos, calendar events, and files. Includes things like concert tickets, links your friends have shared, and more.

11:22 am: Ask Siri to find something when you can't remember if it was in an email, a text, or a shared note.

11:23 am: Siri will be able to find a photo of your driver's license, extract your ID number, and enter it into a form, all automatically.

11:23 am: "Siri, when is my mom's flight landing?"

Accesses flight details from an email and gets up-to-date flight status.

11:24 am: "What are our lunch plans?"

"How long will it take to get there from the airport?"

11:24 am: Same Siri updates are coming to iPad and Mac, too.

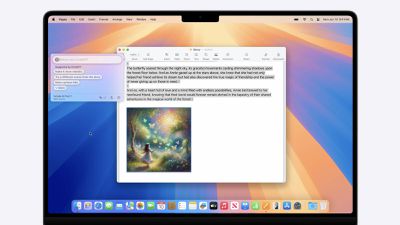

11:25 am: A new feature called Rewrite lets you change the tone of your text or proofread it inline in Mail and other apps.

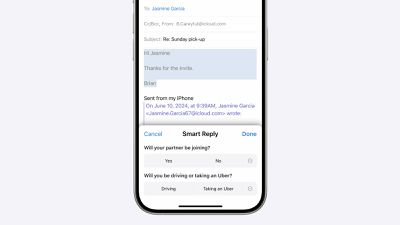

11:27 am: Smart Reply in Mail suggests replies by identifying the questions you were asked in a message, then incorporates your answers.

11:27 am: Instead of previewing the first few lines in an email, get summaries of emails in your inbox.

11:27 am: Can automatically surface important emails and put them at the top.

11:28 am: Automatically summarizes notifications, too. New Focus mode called "Reduce Interruptions" that automatically looks at notifications and messages to see if they're important enough to interrupt you.

11:29 am: In Messages, even with thousands of emoji to choose from, now we have "Genmoji" — create new emoji on device in the keyboard.

11:30 am: You can even create Genmoji that look just like people in your photos.

11:30 am: New experience called Image Playground.

11:30 am: Choose from a range of concepts like themes, costumes, accessories, places, and more.

11:31 am: "No need to engineer the perfect prompt."

11:31 am: Previews are created on-device.

11:31 am: Can type descriptions and choose from Animation, Illustration, or Sketch.

11:33 am: Accessible in Messages, Pages, Freeform, and even a dedicated Image Playground app.

11:33 am: A new Image Wand tool in Notes can transform a rough sketch into a polished image.

11:33 am: It uses on-device intelligence to analyze a sketch and the surrounding words to create an image.

11:34 am: Photos can also remove unwanted objects and repair images.

11:34 am: Search photos for: "Maya skateboarding in a tie-dye shirt" or "Katie with stickers on her face".

11:35 am: Search videos too: "Maria cartwheeling on the grass."

11:35 am: Apple Intelligence can create memories with the story you wanted to see: "Leo learning to fish and making a big catch, set to a fishing tune."

11:37 am: Craig is back. Notes can record and transcribe audio. When your recording is finished, Apple Intelligence automatically generates a summary. Recording and summaries coming to phone calls too.

11:37 am: Apple Intelligence is included free with iOS 18, iPadOS 18, and macOS Sequoia.

11:38 am: Integrating external models with experiences, including ChatGPT. Powered by GPT-4o. Support built into Siri.

11:38 am: Siri can determine when a question might benefit from ChatGPT and asks your permission before sending it.

11:38 am: You can ask questions about documents, photos, or PDFs. Compose lets you create content with ChatGPT for whatever you're writing about.

11:39 am: You'll be able to access ChatGPT for free, without creating an account. Requests are not logged. ChatGPT subscribers will be able to access paid features within the experiences.

11:39 am: Coming to iOS 18, iPadOS 18, and macOS Sequoia. Support for other AI models planned for the future.

11:40 am: SDKs are coming for Image Playground and Writing Tools, plus enhancements to SiriKit.

11:41 am: More details coming later in the State of the Union, including what's coming to Xcode, with on-device code completion and smart assistance for Swift coding questions.

11:41 am: "AI for the rest of us."

11:42 am: Coming to iPhone 15 Pro, plus iPad and Mac models with M1 and later.

11:42 am: Coming this summer in US English for developers. Launching in beta as part of iOS 18, iPadOS 18, and macOS Sequoia this fall. Other languages and platforms coming later this year.

11:42 am: Apple Intelligence is coming system-wide. "I hope you're as excited as I am for the road ahead."

11:43 am: Tim is back. "It's been an exciting day of announcements."

11:43 am: All developer content from WWDC is available for free, online.

11:44 am: "Let's have a great WWDC!"

11:44 am: As we've seen previously, the video ends with: "Shot on iPhone, edited on Mac."

11:45 am: And after an hour and 45 minutes, we're wrapped! Stay tuned for more.